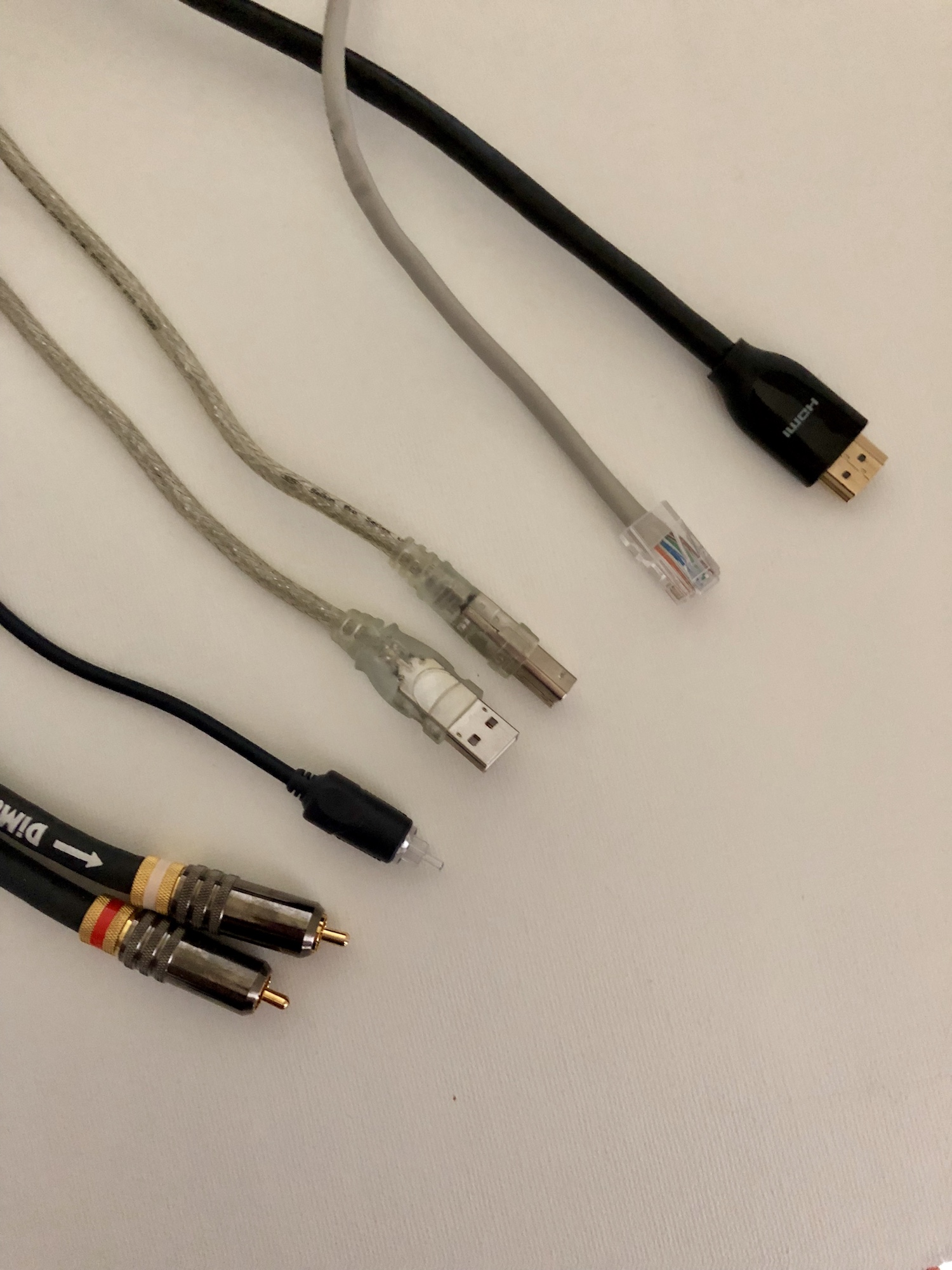

From Left to Right: A pair of Stereo Analog Audio RCA Interconnect cables, an Optical (S/PDIF) Digital Audio cable, a USB Digital Interconnect cable with a Type-A (thin rectangular) plug on one end and a Type-B (squarish) plug on the other, a CAT 5e Ethernet cable, and a Premium Certified HDMI cable.

One of the enduring mysteries of Home Theater is how to connect your gear together so that the audio and video you WANT to pass between devices will actually get there! It sometimes seems like every path is strewn with potholes.

Restrictions can be technical, historical, and even legal (content licensing prohibitions). In this post, I'll attempt to demystify the most common restrictions.

Let's start with some of the basic differences.

If you've read my previous posts on Digital Audio and Digital Video, the first difference should be pretty easy to grasp: Analog connections carry Analog Audio or Video -- never Digital. And Digital connections carry Digital Audio or Video -- never Analog.

So if someone asks you, "Why can't I get a Dolby Atmos Bitstream into my Audio/Video Receiver (AVR) using my multi-channel Analog Audio connections?", the answer should now be obvious. That Bitstream is Digital Audio, and you don't get Digital audio on Analog Audio connections. Or if someone asks, "How do I know if the DACs (Digital to Analog Converters) in my source device are doing a good job when I listen using HDMI Audio?", the answer should also be obvious. The output of the DACs is Analog Audio, and HDMI never carries Analog Audio. When you are listening to HDMI Audio, the DACs in your source device are not even in the signal path.

HISTORICAL NOTE: When Digital Video was new, a cabling standard called DVI was invented for it -- originally conceived to connect computers to their display monitors. DVI is the Video-only predecessor of today's HDMI cabling. In an effort to increase early adoption of DVI cabling, the standard allowed for BOTH Analog and Digital video in the same cable! However, this was something of a cheat. The Analog and Digital video signals used separate wires and separate pins in the plug ends, and in practice a given cable was always used for just Analog or just Digital Video, according to the two DVI-capable devices it was connecting.

Another basic difference is between Audio and Video "transmission" formats and Audio and Video "file" formats. File formats are used to store Audio and/or Video on a standalone hard drive, OR in your computer, OR in a NAS (Network Attached Storage) device implementing a Server. Servers come in different types (in-home SMB and DLNA Servers for example), and may either be on your local network or out on the Internet, perhaps operated by some commercial service.

The audio and video content in file formats does not get played directly. The file must first be processed by some program which understands that type of File and which can extract the Audio and Video from it. The result of that processing is Audio and Video transmission formats such as LPCM audio and YCbCr video.

Networking connections (Wifi and Ethernet) typically carry file formats. The file itself is delivered to the receiving device and the processing of that file happens in the receiving device.

HDMI connections, on the other hand, carry transmission formats. That means if you are playing a file and want to connect to the next device via HDMI, there must be a program in the source device which can process that type of file and generate the audio and video transmission formats to go out on the HDMI connection. You can't just send the file itself over HDMI.

An MP4 file, for example, is one type of file format which can hold both audio and video. Suppose you have one of those on the hard drive of your computer. Your TV may know how to play MP4 files, and if so, you may be able to connect both your computer and your TV to your house network and have the TV play that file by accessing it on your computer. The TV processes the file and directly uses the resulting audio and video from it.

You may also have HDMI cabled between your computer and the TV. But if you want to play the MP4 file THAT way, the Computer ITSELF must have a program which knows how to process (play) the MP4 file for HDMI output. What goes out on the HDMI connection to the TV is *NOT* the MP4 file itself. It is instead, perhaps, stereo LPCM digital audio and YCbCr digital video.

USB cabling is an odd duck in that it can be used BOTH ways. A USB cable can be used to connect a hard drive to a device. Used that way, the USB cable will be carrying files between the hard drive and the device.

A USB cable can ALSO be used to carry audio streams (transmission formats) from a computer to a standalone, Audio DAC (Digital to Analog Converter). Used THAT way the USB is carrying an audio transmission format -- such as Stereo LPCM Digital Audio. If the Audio you are playing is coming from a file on the computer, then some program on the computer must be used to play (process) that file and produce the audio transmission stream which will go out on the USB connection.
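The file-versus-transmission split above can be summarized in a few lines of Python. This is only a sketch of the typical cases described in this post: the connection labels and the `source_must_play_file` helper are hypothetical names made up for illustration, not part of any real API.

```python
from enum import Enum


class Payload(Enum):
    FILE = "file format"            # e.g. an MP4 file, delivered intact
    STREAM = "transmission format"  # e.g. LPCM audio, YCbCr video


# Typical payload for each kind of connection, as described in this post.
# The connection names are hypothetical labels for this sketch.
TYPICAL_PAYLOAD = {
    "network": Payload.FILE,
    "hdmi": Payload.STREAM,
    "usb_to_hard_drive": Payload.FILE,
    "usb_to_dac": Payload.STREAM,
}


def source_must_play_file(connection: str) -> bool:
    """True if the sending device has to process (play) the file itself."""
    return TYPICAL_PAYLOAD[connection] is Payload.STREAM


for name in TYPICAL_PAYLOAD:
    print(name, "->", TYPICAL_PAYLOAD[name].value,
          "| source plays the file:", source_must_play_file(name))
```

Whenever the payload is a transmission format, the playing has to happen in the source device; whenever it is a file, the playing happens at the far end.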

TECHNICAL NOTE: Networking connections (Wifi and Ethernet) can also be used to stream audio and video transmission formats directly -- a so-called "Play Anywhere" setup within your house network. These are specialized setups, and I won't discuss them further in this post.

With those two big differences out of the way, let's drill down and discuss the important limitations on Audio and Video transmission formats when carried over Digital cabling connections. These would include Optical/Coax connections and HDMI.

Optical and Coaxial cabling are used interchangeably to carry "S/PDIF" (Sony/Philips Digital Interface) Digital Audio signals.

HISTORICAL NOTE: Coaxial cables were ALSO used to carry ANALOG Video in prior generations of hardware -- for example the 3-cable connections of "Component" Analog Video. But as mentioned in my Digital Video post, the industry has been deliberately eliminating Analog Video output from newer generations of gear, so I won't discuss that further in this post.

S/PDIF connections have important limitations on what they can carry. Some of this has to do with technical limitations on the transmitters and receivers at either end of the cable, but most of it has to do with the fact that S/PDIF connections do not come with Copy Protection! So the content owners (Studios) do not want their highest quality content to be carried on S/PDIF.

The limitations for S/PDIF Digital Audio connections are:

- No multi-channel LPCM -- only Stereo

- No Lossless Bitstreams -- only Lossy

- No output whatsoever from SACD music discs

- Reduced data rate audio from DVD-Audio music discs

TECHNICAL NOTE: SACD discs are sometimes authored as "Hybrid" discs which can also be played on regular, CD music disc players. The output of the lower quality, CD content on the Hybrid disc can be output on S/PDIF connections. However, when the disc is played in an SACD player, this will not happen automatically. You must first instruct the SACD player to play the lower quality CD content from that Hybrid disc.

So what happens on the S/PDIF Digital Audio outputs if you play a movie that has a multi-channel LPCM audio track, or a Lossless Bitstream audio track?

In the case of the LPCM track, you get a "Down-mix" of the multi-channel audio to Stereo.

In the case of the Lossless Bitstream audio track, you can only get the Lossy "Compatibility" track. Typically this would be a traditional, Lossy DD 5.1 or DTS 5.1 track as found on SD-DVD movie discs.

In the case of movies on Blu-ray or UHD discs, whenever the feature audio is a Lossless Bitstream -- i.e., Dolby TrueHD or DTS-HD MA, including the Atmos and DTS:X variants of those -- there must ALSO be a Compatibility track on the disc for just this purpose. The Compatibility track may not be shown as an option on the disc's audio selection menus, but it is there nonetheless. In the case of a DTS-HD MA Bitstream track, the Compatibility track is actually embedded inside that Bitstream content on the disc. In the case of a Dolby TrueHD track, the Compatibility track is a separate, "Associated" track on the disc. All of this happens automatically. So for example if you play a Blu-ray Lossless Bitstream track on a player that allows simultaneous output on its HDMI and S/PDIF connection, the HDMI connection will play the Lossless Bitstream track and the S/PDIF connection will play the Lossy Compatibility track.
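The S/PDIF rules above boil down to a simple lookup. Here is a minimal sketch of them in Python. The track labels and the `spdif_output` function are hypothetical names invented for this example, and real players may differ in the details.

```python
# What an S/PDIF output carries for each kind of source audio,
# following the rules listed in this post. Keys are hypothetical labels.
SPDIF_RULES = {
    "stereo_lpcm": "stereo LPCM",
    "multichannel_lpcm": "stereo LPCM (down-mix)",
    "lossy_bitstream": "lossy bitstream (DD 5.1 or DTS 5.1)",
    "lossless_bitstream": "lossy Compatibility track",
    "sacd": "no output",
    "dvd_audio": "reduced data rate audio",
}


def spdif_output(track: str) -> str:
    """Return what comes out of S/PDIF for the given source track type."""
    if track not in SPDIF_RULES:
        raise ValueError(f"unknown track type: {track}")
    return SPDIF_RULES[track]


print(spdif_output("lossless_bitstream"))
print(spdif_output("multichannel_lpcm"))
```

Note that a Hybrid SACD played from its CD content would count as "stereo_lpcm" in this sketch, not "sacd".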

Optical/Coax (S/PDIF) Connections may sound complicated, but at least there's only one VERSION of them to worry about!

The same cannot be said for HDMI. HDMI connections have evolved over time to add features and formats. Each such change involves new hardware because, at the very least, the HDMI transmitter and receiver chips in the gear at each end of the cable have to be replaced.

In addition, the natural trend in HDMI is to INCREASE the bandwidth (data rate) the cable is expected to carry. This frequently means that older cables simply can't do the job when you upgrade your devices at either end of the cable. (This is a PARTICULAR nuisance for folks who have HDMI cable runs embedded inside their walls!)

All of these HDMI Version changes are supposed to be "Backwards Compatible", meaning that you can connect newer gear to older gear and older cabling, and it is supposed to still work -- up to the feature set of that older gear. And for the most part this has been kinda-sorta true.

The problems usually arise because the older devices were not well-programmed to protect themselves in the face of unknowable, future changes. So for example, during an HDMI Handshake, the source device asks the destination device what it can accept as possible formats. There was a point not too long ago where typical Cable and Satellite TV set top boxes failed willy-nilly when attached to newer gear. Why? Because the newer gear was sending them back a list LONGER than they were programmed to handle!

In terms of the cables themselves, the biggest fiasco has been the recent introduction of 4K video, which, as it turns out, doesn't actually work on many of the cables originally sold as good for 4K video! See my post on Premium Certified HDMI Cables for more info on that.

But gotchas like these aside, HDMI has the unique joy of being the Go To Cabling for Home Theater. That is, HDMI cabling is the cabling that's supposed to be able to handle EVERYTHING Digital. If you've got the right gear for 4K Video with HDR and WCG, HDMI cabling can handle it. If you've got the right gear for 3D Video, HDMI cabling can handle it. Want high bit-rate, multi-channel, audiophile quality Digital Audio? HDMI cabling can handle it. You might need to buy new cables, but they are still "HDMI"!

HOWEVER, there is one limitation that has to be mentioned. And that has to do with the fact that HDMI Audio is always embedded INSIDE HDMI Video.

Always....

In the early days of TV, pauses were built into the transmission of the video signal. At the end of each line of video there was a pause to allow the TV to get set to begin the next line of video. And at the end of the last line of video of a given image, there was a much longer pause to allow the TV to get set to begin the first line of the next image.

These pauses were called "blanking intervals".

And when Digital Video came to be, these pauses were PRESERVED in the Digital Video formats. So there is a horizontal blanking interval at the end of each line, and a vertical blanking interval at the end of each image. In the case of Digital Video, these blanking intervals still involve data being transmitted. I.e., the data just keeps flowing in the video stream even though it is not being used.

The data flows along in steps called Pixel Clock Times, and the number of Pixel Clock Times per second is a function of the number of lines of video, the number of pixels per line, and the number of images per second. The included Horizontal and Vertical blanking intervals are, then, just a set percentage of the total number of Pixel Clocks per frame of video.

And so, the higher the video resolution, the more Pixel Clocks there are in the blanking intervals.
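To put some numbers on that, here is a small Python sketch of the Pixel Clock arithmetic. The timing figures (total pixels per line and total lines per frame, blanking included) are the standard CEA-861 values for 480p, 720p, and 1080p at 60 Hz. They are not given in this post, so treat them as assumptions brought in for illustration. The function names are likewise made up for this example.

```python
from fractions import Fraction

# (active width, active height, total width, total height, frames per second)
# "Total" includes the horizontal and vertical blanking intervals.
TIMINGS = {
    "480p": (720, 480, 858, 525, Fraction(60000, 1001)),  # 59.94 Hz
    "720p": (1280, 720, 1650, 750, Fraction(60)),
    "1080p": (1920, 1080, 2200, 1125, Fraction(60)),
}


def pixel_clock_hz(name):
    """Pixel Clocks per second: total pixels per frame times frame rate."""
    _, _, total_w, total_h, fps = TIMINGS[name]
    return total_w * total_h * fps


def blanking_clocks_per_frame(name):
    """Pixel Clocks in each frame that fall inside the blanking intervals."""
    active_w, active_h, total_w, total_h, _ = TIMINGS[name]
    return total_w * total_h - active_w * active_h


for name in TIMINGS:
    mhz = float(pixel_clock_hz(name)) / 1e6
    print(name, mhz, "MHz,", blanking_clocks_per_frame(name),
          "blanking clocks per frame")
```

With these timings, 480p works out to a 27 MHz Pixel Clock with 104,850 blanking clocks per frame, 720p to 74.25 MHz with 315,900, and 1080p to 148.5 MHz with 401,400. More blanking clocks means more room to stash things.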

And THIS is important because the blanking intervals are where the Digital Audio data is stashed! (There are also some other things stashed in there like Closed Captions text, but the Digital Audio takes up the bulk of it.)

When the Digital Video formats were mapped out, the world was still in the era of Standard Definition Video -- 480i or 480p -- and the formats were conceived in parallel with the Digital Audio formats of that era. And so the Digital Audio capacity of the SD Video, Digital Video formats was based around Stereo LPCM audio and the then-new, Lossy DD 5.1 and DTS 5.1 Bitstream formats.

Which means -- that's all that will fit!

That is, if you send HDMI video at a Resolution less than 720p -- which would include 480i/480p in the US, for example, or 576i/576p in Europe -- the Digital Audio inside that Digital Video stream will be limited to Stereo LPCM (at no more than 48 kHz, 16-bit size) or Lossy Dolby Digital (DD) 5.1 or DTS 5.1 Bitstream tracks.

If you want to send multi-channel LPCM, or a Lossless Bitstream format such as Dolby TrueHD, DTS-HD MA, or the Atmos and DTS:X variants of those, you MUST use a Video Resolution of 720p or higher so there's enough space inside the video to carry those higher bandwidth audio formats.
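For a feel of how much bigger those formats are, the raw data rate of LPCM audio is simple arithmetic: channels times samples per second times bits per sample. The sketch below ignores any packet overhead on the cable, and the 8-channel, 192 kHz, 24-bit case is my own choice of a high-end example, not a figure from this post.

```python
def lpcm_bits_per_second(channels, sample_rate_hz, bits_per_sample):
    """Raw LPCM payload rate, ignoring any transport overhead."""
    return channels * sample_rate_hz * bits_per_sample


stereo_sd = lpcm_bits_per_second(2, 48_000, 16)    # the SD-era ceiling
surround = lpcm_bits_per_second(6, 48_000, 24)     # 5.1 at 48 kHz, 24-bit
high_end = lpcm_bits_per_second(8, 192_000, 24)    # 7.1 at 192 kHz, 24-bit

print(stereo_sd, surround, high_end)
print("high end is", high_end // stereo_sd, "times the stereo rate")
```

Stereo at 48 kHz, 16-bit is 1,536,000 bits per second. The 7.1 high-end case is 36,864,000 bits per second, or 24 times as much.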

What happens if you try to play, say, a Dolby TrueHD Bitstream track in video that is being sent out on HDMI at, say, 480p/60? Remember that Compatibility track I mentioned in the S/PDIF cabling section? Yep, that's what you get!
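Put as a rule of thumb in Python, the 720p cutoff looks something like this. It is a simplified sketch: the track labels and the `hdmi_audio_result` function are hypothetical, it lumps all Stereo LPCM together regardless of sample rate, and it returns `None` for the multi-channel LPCM case because this post doesn't say what a given player will do there.

```python
# Audio formats that need 720p or higher video to fit, per this post.
NEEDS_720P = {"multichannel_lpcm", "lossless_bitstream"}


def hdmi_audio_result(video_lines, track):
    """Return the audio carried on HDMI for a given video resolution.

    video_lines is the vertical resolution (480, 576, 720, 1080, ...).
    """
    if video_lines >= 720 or track not in NEEDS_720P:
        return track  # fits as-is
    if track == "lossless_bitstream":
        return "lossy_compatibility_track"
    return None  # multi-channel LPCM below 720p: not covered here


print(hdmi_audio_result(480, "lossless_bitstream"))
print(hdmi_audio_result(1080, "lossless_bitstream"))
print(hdmi_audio_result(480, "lossy_bitstream"))
```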

Content Licensing also plays an important role in limiting what can be sent on HDMI cabling.

In the case of HDMI this is part and parcel of the HDCP Copy Protection version implemented by the set of gear in your particular chain of HDMI equipment. (As mentioned in a previous post, HDMI is an "end to end" protocol, and so the Copy Protection check -- which is managed by the source device at the start of the chain -- has to be satisfied through all the devices all the way to the end of the signal chain.)

What you will get if the Copy Protection check fails depends on whether any LESSER version of HDCP can pass the check in your chain of gear. If the HDCP check fails altogether you will get a muted signal -- no Audio and, usually, a black screen of video. (This most frequently happens if you still need to upgrade your HDMI cables to handle new, higher bandwidth formats coming from your new gear. HDMI HDCP is finicky. It LIKES to fail. And marginal cabling is a ready source of such failures.)

But depending on the circumstances, passing the check for a lesser version of HDCP may mean you can get a reduced quality version of the Video. For example, UHD (4K) playback gear is designed to be compatible with prior, 1080p, AVRs and TVs, so long as they pass the HDCP check for that OLDER level of content. Which means you can play a UHD/HDR Blu-ray disc into a 1080p TV and get 1080p video in Standard Dynamic range. The down-conversion of both Resolution and Dynamic Range happens automatically. The Color Space also gets converted automatically from BT.2020 to BT.709. See my post on Digital Video for details.
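The "end to end" nature of the HDCP check means the weakest device in the chain decides the outcome. Here is a rough Python sketch of that idea. The numeric levels (2 for the newer HDCP level that UHD content wants, 1 for the older level used by 1080p gear, 0 for a failed check) and the `playback_result` function are simplifications I made up for illustration. Real HDCP negotiation is far more involved.

```python
def playback_result(chain_levels, content_level=2):
    """Outcome of playing protected content through a chain of HDMI devices.

    chain_levels: the highest HDCP level each downstream device passes,
    using the hypothetical scale 0 (fails), 1 (older), 2 (newer).
    """
    if not chain_levels:
        raise ValueError("the chain needs at least one device")
    weakest = min(chain_levels)  # every device must satisfy the check
    if weakest >= content_level:
        return "full quality"
    if weakest >= 1:
        return "reduced quality (e.g. 1080p, Standard Dynamic Range)"
    return "muted (no audio, usually a black screen)"


print(playback_result([2, 2]))  # UHD AVR into UHD TV
print(playback_result([2, 1]))  # UHD AVR into 1080p TV
print(playback_result([2, 0]))  # check fails somewhere in the chain
```

As noted above, whether you actually get the reduced quality version depends on the circumstances, so treat the middle case as "may" rather than "will".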

So there you have it. Technology, History, and Licensing are the culprits! Hopefully this post will help you minimize your sleuthing for solutions!

--Bob